The evolution of AI chatbots has reached an inflection point: Retrieval-Augmented Generation (RAG) systems can deliver markedly better results than their traditional counterparts. Businesses that deploy these context-aware agents with human-like AI personas report conversion rates up to three times higher than those using conventional rule-based chatbots. A gap of that size is not marginal; it reflects a fundamental shift in how businesses can use AI for customer engagement.

Understanding the Fundamental Difference

Traditional chatbots operate on predefined rules and decision trees. They follow rigid pathways programmed by developers, recognizing specific keywords or phrases to trigger predetermined responses. While efficient for handling straightforward queries, these systems quickly reach their limits when conversations become nuanced or deviate from expected patterns.

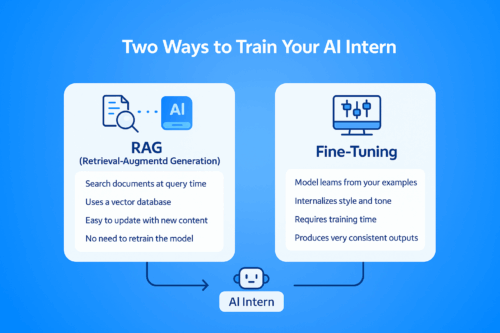

RAG chatbots, by contrast, combine the power of large language models with the ability to retrieve and reference specific information. This architecture allows them to:

- Access and incorporate relevant data in real time

- Maintain context throughout complex conversations

- Provide accurate, data-backed responses

- Improve over time as the knowledge base is updated and past interactions are added to it, without retraining the underlying model

The Technical Architecture That Makes RAG Superior

RAG systems use a two-stage process, retrieval followed by generation:

1. Retrieval Component

When a user query arrives, the RAG system first searches through its knowledge base to find relevant information. This knowledge base can include:

- Company documentation

- Product specifications

- Previous customer interactions

- Up-to-date market information

The retrieval mechanism uses semantic search rather than simple keyword matching. Queries and documents are compared by meaning, typically as embedding vectors, so a relevant passage can be found even when it shares no exact words with the query.
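To make the retrieval step concrete, here is a minimal Python sketch that ranks knowledge-base passages by cosine similarity. The three-dimensional vectors are hand-written stand-ins for what an embedding model would produce, and `retrieve` and `INDEX` are illustrative names rather than part of any particular RAG product.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vector, index, top_k=2):
    """Return the top_k (score, passage) pairs, best match first."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Assumption: toy vectors written by hand. A real system would compute
# them with an embedding model and store them in a vector index.
INDEX = [
    ("Refunds are issued within 14 days of purchase.", [0.9, 0.1, 0.0]),
    ("The Pro plan includes priority support.", [0.1, 0.9, 0.1]),
    ("Our office is closed on public holidays.", [0.0, 0.2, 0.9]),
]

# Assumed vector for a query like "Can I get my money back?", which shares
# no keywords with the refund passage but is close to it in meaning.
query_vector = [0.8, 0.2, 0.1]
results = retrieve(query_vector, INDEX, top_k=1)
```

With these toy vectors the refund passage ranks first, which is the behavior keyword matching alone would miss.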

2. Generation Component

Once relevant information is retrieved, the large language model generates a response that incorporates this specific knowledge while maintaining conversational fluency. This approach combines the factual accuracy of retrieved information with the natural language capabilities of modern AI models.
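The generation step can be sketched as prompt assembly: the retrieved passages are placed in front of the question so the model answers from them. This is a simplified illustration. `build_prompt`, `answer`, and the `generate` callable are hypothetical names, and `generate` stands in for whatever language model API a given deployment uses.

```python
def build_prompt(question, passages):
    """Combine retrieved passages and the user question into one prompt."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the customer's question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def answer(question, passages, generate):
    """Send the assembled prompt to a model.

    `generate` is an assumption: any callable that takes a prompt string
    and returns the model's text.
    """
    return generate(build_prompt(question, passages))


prompt = build_prompt(
    "Can I get a refund?",
    ["Refunds are issued within 14 days of purchase."],
)
```

Numbering the passages is a design choice that makes it easy to ask the model to cite which passage supports its answer.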

This architecture lets AI agents handle complex customer journeys that would confound traditional systems.

Why RAG Chatbots Achieve 3x Higher Conversion Rates

The reported improvement in conversion rates is not coincidental. It follows from several key advantages:

Contextual Understanding Drives Personalization

RAG chatbots maintain conversation history and context, allowing them to provide truly personalized experiences. Rather than treating each interaction as isolated, they build a comprehensive understanding of customer needs throughout the conversation.

This contextual awareness enables them to offer solutions that precisely match customer requirements, significantly increasing the likelihood of conversion. The ability to personalize at scale creates experiences that feel tailored to each individual customer.
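One simple way to carry context between turns is to keep a rolling window of the conversation and fold earlier user messages into the retrieval query. The sketch below assumes that approach; the `Conversation` class is illustrative, and production systems often ask the language model to rewrite the query instead of concatenating text.

```python
from collections import deque


class Conversation:
    """Keeps the most recent turns of a chat (hypothetical helper)."""

    def __init__(self, max_turns=6):
        # Older turns fall off automatically once max_turns is reached.
        self.turns = deque(maxlen=max_turns)

    def add(self, role, text):
        self.turns.append((role, text))

    def contextual_query(self, message):
        """Prefix the new message with earlier user messages still in the window."""
        earlier = [text for role, text in self.turns if role == "user"]
        return " ".join(earlier + [message])


chat = Conversation(max_turns=4)
chat.add("user", "I need a laptop for video editing.")
chat.add("assistant", "Happy to help. Do you have a budget in mind?")
query = chat.contextual_query("Something under $1500.")
```

Here the follow-up "Something under $1500." is searched together with the earlier laptop request, so the customer does not have to repeat it.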

Reduced Friction in the Customer Journey

Traditional chatbots often force customers into rigid conversational paths, creating frustration when their queries don’t fit predefined patterns. RAG systems adapt to the customer’s communication style and needs, dramatically reducing friction points that lead to abandonment.

By maintaining context throughout interactions, these systems eliminate the need for customers to repeat information or navigate complicated menu trees, creating a smoother path to conversion.

Enhanced Problem-Solving Capabilities

When customers encounter obstacles in their journey, traditional chatbots frequently hit dead ends, unable to address unique scenarios. RAG chatbots can:

- Understand complex, multi-part questions

- Provide nuanced answers that address specific concerns

- Offer creative solutions by combining different knowledge sources

- Handle exceptions without defaulting to human escalation

This problem-solving capability keeps customers engaged in the conversion funnel rather than abandoning due to unresolved issues.

Data-Driven Recommendations

RAG chatbots leverage their access to comprehensive knowledge bases to make highly relevant product or service recommendations. Unlike traditional systems that might offer generic suggestions based on simple rules, RAG chatbots can:

- Analyze stated and implied customer needs

- Match these needs with specific product features

- Provide evidence-based comparisons between options

- Anticipate objections and proactively address them

This data-driven approach leads to recommendations that customers perceive as genuinely helpful rather than pushy sales tactics.
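As a rough illustration of matching needs to product features, the sketch below ranks products by how many of the customer's stated needs each one covers and keeps the matched features as supporting evidence. The catalog, feature names, and `rank_products` function are made up for the example. In a RAG system the needs would be extracted from the conversation and the features retrieved from the product knowledge base.

```python
def rank_products(needs, catalog):
    """Rank products by the share of customer needs they cover.

    needs: set of feature strings the customer asked for.
    catalog: dict mapping product name to a set of feature strings.
    Returns (coverage, name, matched_features) tuples, best first.
    """
    ranked = []
    for name, features in catalog.items():
        matched = sorted(needs & features)
        coverage = len(matched) / len(needs) if needs else 0.0
        ranked.append((coverage, name, matched))
    # Highest coverage first; ties broken alphabetically by product name.
    ranked.sort(key=lambda item: (-item[0], item[1]))
    return ranked


# Hypothetical catalog used only for illustration.
CATALOG = {
    "Laptop A": {"4k export", "long battery", "touchscreen"},
    "Laptop B": {"long battery"},
}

ranking = rank_products({"4k export", "long battery"}, CATALOG)
```

Returning the matched features alongside the score gives the chatbot something concrete to cite when it explains why one option fits better than another.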

Real-World Implementation Challenges

Despite their clear advantages, implementing RAG chatbots comes with challenges:

Knowledge Base Management

The effectiveness of a RAG system depends heavily on the quality and organization of its knowledge base. Companies must invest in:

- Comprehensive documentation of products, services, and policies

- Regular updates to ensure information remains current

- Proper structuring of information for efficient retrieval

- Quality control processes to prevent inaccuracies
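Structuring information for retrieval usually involves splitting long documents into smaller passages. A minimal sketch of one common approach, fixed-size chunks with a small overlap so that sentences cut at a boundary still appear whole in a neighboring chunk, is shown below. The function name and default sizes are assumptions for illustration, not recommended settings.

```python
def chunk_words(text, max_words=50, overlap=10):
    """Split text into chunks of up to max_words words.

    Consecutive chunks share `overlap` words.
    """
    if max_words <= 0 or overlap < 0 or overlap >= max_words:
        raise ValueError("require max_words > overlap >= 0")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once the current chunk reaches the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
pieces = chunk_words(sample, max_words=4, overlap=1)
```

Each chunk would then be embedded and stored along with metadata such as its source document and last-updated date, which supports the update and quality-control processes listed above.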

Integration Complexity

RAG systems require more sophisticated integration with existing business systems compared to traditional chatbots. Companies need to connect their RAG implementation with:

- CRM systems to access customer history

- Product databases for accurate information

- Order management systems for transaction processing

- Analytics platforms for performance tracking

Training Requirements

While RAG systems reduce the need for extensive pre-programming of responses, they still require initial setup and tuning to perform well. This includes:

- Fine-tuning the retrieval mechanism for relevant information selection

- Adjusting response generation parameters for brand voice consistency

- Creating fallback mechanisms for edge cases

Companies looking to implement domain-specific agents should consider proper AI training methodologies to maximize effectiveness.
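A fallback mechanism for edge cases can be as simple as a confidence threshold on retrieval scores: if no passage is similar enough to the query, the bot hands off instead of guessing. The sketch below assumes scores between 0 and 1. The 0.75 threshold, the `respond` function, and the fallback wording are illustrative choices that would need tuning against real conversations.

```python
FALLBACK_MESSAGE = (
    "I'm not sure about that. Let me connect you with a colleague who can help."
)


def respond(scored_passages, generate, min_score=0.75):
    """Answer from confident passages, or fall back and flag for escalation.

    scored_passages: list of (similarity_score, passage_text) pairs.
    generate: assumed callable that turns a list of passages into a reply.
    min_score: assumed threshold; the right value depends on the retriever.
    """
    confident = [text for score, text in scored_passages if score >= min_score]
    if not confident:
        return {"reply": FALLBACK_MESSAGE, "escalate": True}
    return {"reply": generate(confident), "escalate": False}


# A weak match triggers the fallback path.
low_confidence = respond(
    [(0.40, "Our office is closed on public holidays.")],
    generate=lambda passages: "unused",
)
```

Logging the queries that trigger the fallback is a practical way to find gaps in the knowledge base.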

Measuring ROI: Beyond Conversion Rates

While the 3x improvement in conversion rates is compelling, the ROI of RAG chatbots extends to multiple business metrics:
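For readers who want to check a conversion claim against their own traffic, the arithmetic is straightforward: conversion rate is conversions divided by sessions, and the lift is the ratio of the two rates. The figures in this sketch are invented to show the calculation and are not measured results.

```python
def conversion_rate(conversions, sessions):
    """Share of sessions that ended in a conversion."""
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    return conversions / sessions


def relative_lift(baseline_rate, variant_rate):
    """How many times higher the variant rate is than the baseline."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return variant_rate / baseline_rate


# Made-up numbers for illustration only.
rule_based = conversion_rate(conversions=20, sessions=1000)   # 2% of sessions
rag_based = conversion_rate(conversions=60, sessions=1000)    # 6% of sessions
lift = relative_lift(rule_based, rag_based)                   # roughly 3x
```

A fair comparison also needs similar traffic sources and a large enough sample for both chatbots, ideally from a controlled A/B test.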

Customer Satisfaction Metrics

Companies implementing RAG chatbots often report improvements in:

- Net Promoter Scores (NPS)

- Customer Satisfaction (CSAT) ratings

- Complaint volumes

- Sentiment expressed in customer feedback
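The two survey metrics above have standard definitions that are easy to compute. NPS is the percentage of promoters (ratings of 9 or 10 on a 0 to 10 scale) minus the percentage of detractors (0 to 6). CSAT is commonly reported as the percentage of respondents choosing 4 or 5 on a 5-point scale. The sample ratings below are invented for illustration.

```python
def net_promoter_score(ratings):
    """NPS from 0-10 ratings: percent promoters minus percent detractors."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


def csat(ratings, satisfied_threshold=4):
    """CSAT from 1-5 ratings: percent at or above the satisfied threshold."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    satisfied = sum(1 for r in ratings if r >= satisfied_threshold)
    return 100 * satisfied / len(ratings)


# Made-up survey responses.
nps = net_promoter_score([10, 9, 8, 7, 6, 3, 10, 9, 5, 10])
satisfaction = csat([5, 4, 3, 2, 5])
```

Tracking both before and after a chatbot rollout, on comparable customer segments, gives a clearer picture than either number alone.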

Operational Efficiency

RAG systems deliver operational benefits including:

- Lower escalation rates to human agents

- Reduced average handling time

- Increased first-contact resolution rates

- Ability to handle higher interaction volumes
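These operational metrics can be computed from a log of chatbot interactions. The sketch below assumes each logged interaction records its handling time, whether it was escalated, and whether it was resolved on first contact. The `Interaction` fields and sample values are hypothetical and would map to whatever an analytics platform actually exports.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    handling_seconds: float
    escalated: bool
    resolved_first_contact: bool


def operational_summary(interactions):
    """Escalation rate, first-contact resolution rate, and average handling time."""
    total = len(interactions)
    if total == 0:
        raise ValueError("interactions must not be empty")
    return {
        "escalation_rate": sum(i.escalated for i in interactions) / total,
        "first_contact_resolution_rate": sum(
            i.resolved_first_contact for i in interactions
        ) / total,
        "average_handling_seconds": sum(
            i.handling_seconds for i in interactions
        ) / total,
    }


# Made-up log entries for illustration.
LOG = [
    Interaction(120, escalated=False, resolved_first_contact=True),
    Interaction(300, escalated=True, resolved_first_contact=False),
    Interaction(90, escalated=False, resolved_first_contact=True),
    Interaction(210, escalated=False, resolved_first_contact=False),
]
summary = operational_summary(LOG)
```

Comparing the same summary for the periods before and after deployment shows whether escalations and handling time are actually moving in the expected direction.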

Long-Term Customer Value

The improved customer experience provided by RAG chatbots contributes to:

- Higher customer retention rates

- Increased repeat purchase frequency

- Larger average order values

- More positive word-of-mouth and referrals

Key Takeaways

- RAG chatbots leverage retrieval-augmented generation to provide contextually relevant, accurate responses that traditional chatbots cannot match.

- The 3x improvement in conversion rates stems from enhanced personalization, reduced friction, superior problem-solving, and data-driven recommendations.

- Implementing RAG systems requires investment in knowledge base management, integration capabilities, and proper training.

- ROI extends beyond conversion rates to include improved customer satisfaction, operational efficiency, and long-term customer value.

- As AI technology continues to evolve, the gap between RAG and traditional chatbots is likely to widen further.

Conclusion

The shift from traditional rule-based chatbots to context-aware RAG systems represents a major step forward in customer engagement capabilities. With reported conversion rates up to three times higher than conventional approaches, RAG chatbots can deliver compelling ROI while improving customer experience across multiple dimensions.

As businesses compete for customer attention in increasingly crowded digital spaces, the ability to provide intelligent, contextual, and helpful automated interactions will become a critical competitive advantage. Organizations that invest in RAG technology now are well positioned to establish a lead over those relying on increasingly outdated rule-based systems.